A/B testing is the practice of showing two variants of the same web page to different segments of visitors at the same time and comparing which variant drives more conversions. In an A/B test, the goals matter most, because they decide the winning variation. So if we do proper QA and troubleshooting to check that each goal is working, our A/B testing will serve its purpose well.

We work hard to make A/B tests work properly, but sometimes technology doesn’t work the way you expect it to. For those less-happy moments, VWO provides several ways to troubleshoot your experiment or campaign.

Tools for QA:

- Result page: lets you view the result for each goal, and the good news is that it updates immediately.

- Network console: helps you verify whether events in a live experiment are firing correctly.

- Browser cookie: helps you verify whether events in a live experiment are firing correctly. It stores information about all types of goals.

Of these, I would say the browser cookie is your best friend. It contains all the information that developers need to troubleshoot experiments, audiences, and goals.

Browser cookie:

VWO logs the events that occur as you interact with a page in your browser’s cookies. When you trigger an event in VWO, it fires a tracking call and stores that information in a browser cookie.

To access the browser cookies:

- Right-click on the page. From the dropdown menu, select Inspect in Chrome or Inspect Element in Firefox.

- Select the Application/Storage tab.

- Select the Cookies tab.

- Select the domain name of your site.

- Filter with “_vis_opt_exp_”.

- To narrow the list to a specific campaign, filter with “_vis_opt_exp_{CAMPAIGNID}_goal_”.

You can see the list of all events (all goal types, such as click, custom, and transaction) that fired. VWO assigns a specific number to each goal. I have highlighted the events for a few goals in the screenshot below.

VWO stores almost all the information a developer needs for troubleshooting in browser cookies: experiments, audiences/segments, goals, users, referrers, sessions, and so on. You can find the details about VWO cookies here.
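The campaign filter above can also be applied in code. The following is only a minimal sketch, not VWO's own API: it assumes that each fired goal appears as a cookie whose name starts with `_vis_opt_exp_{CAMPAIGNID}_goal_` and that whatever follows the prefix identifies the goal. The function names, the campaign ID `12`, and the sample cookie values are hypothetical.

```typescript
// Extract the cookie names from a raw cookie string (the format of `document.cookie`).
function cookieNames(cookieString: string): string[] {
  const names: string[] = [];
  for (const part of cookieString.split(";")) {
    const trimmed = part.trim();
    if (trimmed === "") continue;
    const eq = trimmed.indexOf("=");
    names.push(eq === -1 ? trimmed : trimmed.slice(0, eq));
  }
  return names;
}

// Return whatever follows `_vis_opt_exp_{campaignId}_goal_` in each matching cookie name.
// Assumption: that suffix identifies the goal that fired.
function firedGoalSuffixes(cookieString: string, campaignId: string): string[] {
  const prefix = "_vis_opt_exp_" + campaignId + "_goal_";
  return cookieNames(cookieString)
    .filter((cookieName) => cookieName.indexOf(prefix) === 0)
    .map((cookieName) => cookieName.slice(prefix.length));
}

// Hypothetical cookie string for illustration only.
const sampleCookieString =
  "_vis_opt_exp_12_goal_1=1; _vis_opt_exp_12_goal_204=1; _vis_opt_exp_13_goal_1=1; other=x";
const goalsForCampaign12 = firedGoalSuffixes(sampleCookieString, "12"); // ["1", "204"]
```

In the browser console you could pass `document.cookie` as the first argument instead of the sample string.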

Network console:

The network panel is a log in your browser that records events that occur as you interact with a page. When you trigger an event in VWO it fires a tracking call, which is picked up in the network traffic.

To access the Network tab:

- Right-click on the page. From the dropdown menu, select Inspect in Chrome or Inspect Element in Firefox.

- Select the Network tab.

- Filter with “ping_tpc”.

- Click to fire the event whose details you’d like to see.

You can see the list of all events that fired. I have highlighted the event that has a specific experiment and goal ID in the screenshot below.

Note: If you have already been bucketed into an experiment and have fired a few goals, you might not see any network calls. So always use a fresh incognito window to troubleshoot goals and experiments.

Because VWO updates campaign results immediately, checking the result page is always another good option. But make sure you are the only visitor seeing the experiment at that time.
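If you copy the request URLs out of the Network tab, the same filtering can be done in code. This is a rough sketch under stated assumptions: the helper names, the example host, and the query parameter names `exp` and `goal` are hypothetical, and the code deliberately makes no claim about which parameters a real tracking call carries; it only lists whatever is in the query string.

```typescript
// Keep only the request URLs that contain at least one of the given markers
// (for example "ping_tpc", the filter used in the steps above).
function filterTrackingCalls(urls: string[], markers: string[]): string[] {
  return urls.filter((url) => markers.some((marker) => url.indexOf(marker) !== -1));
}

// Split a URL's query string into key/value pairs without assuming parameter names.
function queryParams(url: string): { [key: string]: string } {
  const params: { [key: string]: string } = {};
  const start = url.indexOf("?");
  if (start === -1) return params;
  const hash = url.indexOf("#", start);
  const query = hash === -1 ? url.slice(start + 1) : url.slice(start + 1, hash);
  for (const pair of query.split("&")) {
    if (pair === "") continue;
    const eq = pair.indexOf("=");
    const key = eq === -1 ? pair : pair.slice(0, eq);
    const value = eq === -1 ? "" : pair.slice(eq + 1);
    params[decodeURIComponent(key)] = decodeURIComponent(value.replace(/\+/g, " "));
  }
  return params;
}

// Hypothetical URLs for illustration only; real hosts and parameters will differ.
const sampleRequests = [
  "https://tracking.example/ping_tpc.php?exp=12&goal=204",
  "https://cdn.example/app.js",
];
const trackingCalls = filterTrackingCalls(sampleRequests, ["ping_tpc"]);
const firstCallParams = queryParams(trackingCalls[0]); // { exp: "12", goal: "204" }
```

Passing the markers as a list means the same helper works for any tool whose tracking calls you identify by a substring of the URL.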

A/B testing is a marketing technique that involves comparing two versions of a web page or application to see which performs better. Developing A/B tests within AB Tasty has a few parallels with conventional front-end development. The most important thing is the goals, because they decide the winning variation. So if we do proper QA and troubleshooting to check that each goal is working, our A/B testing will serve its purpose well.

We work hard to make A/B tests work properly, but sometimes technology doesn’t work the way you expect it to. For those less-happy moments, AB Tasty provides several ways to troubleshoot your experiment or campaign.

Tools for QA:

- Preview link: lets you view a variation and switch from one variation to another. You can also track click goals by enabling “Display Click tracking info’s”.

- Network console: helps you verify whether events in a live experiment are firing correctly.

- Local storage: helps you verify whether events in a live experiment are firing correctly. It stores information about all click and custom goals.

Of these, I would say the Network tab is your best friend. It contains all the information that developers need to troubleshoot experiments, audiences, goals, and code execution on page load.

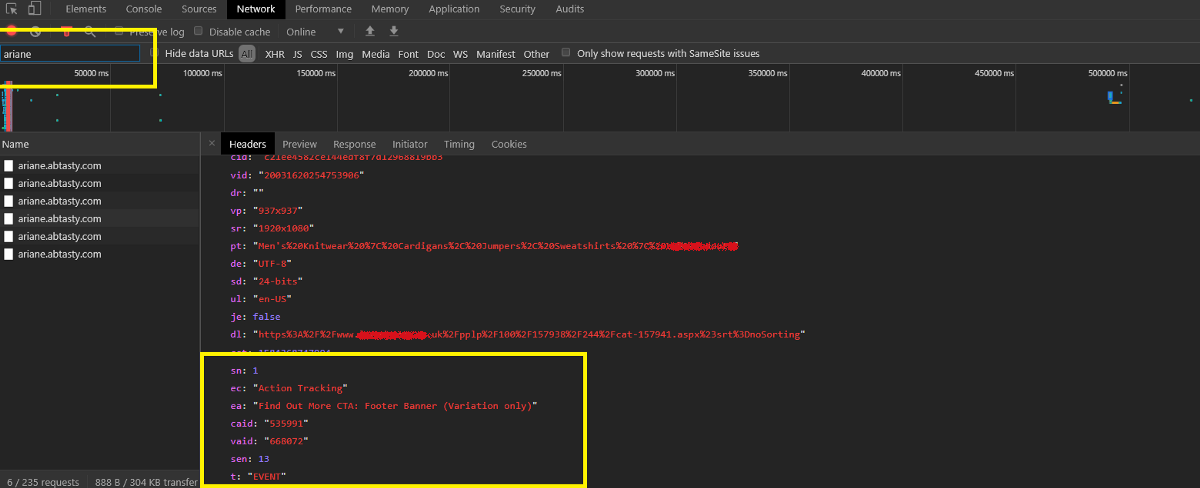

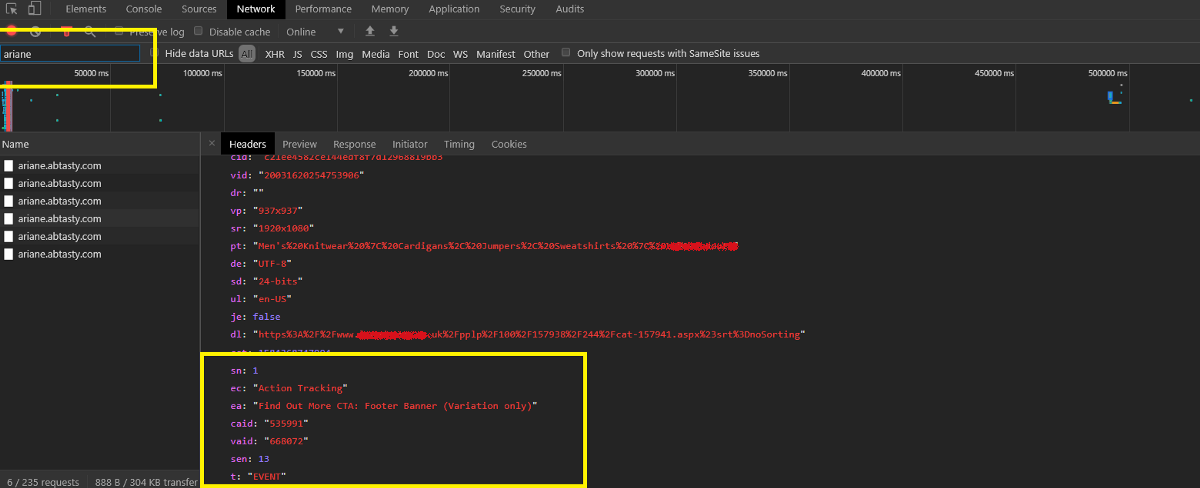

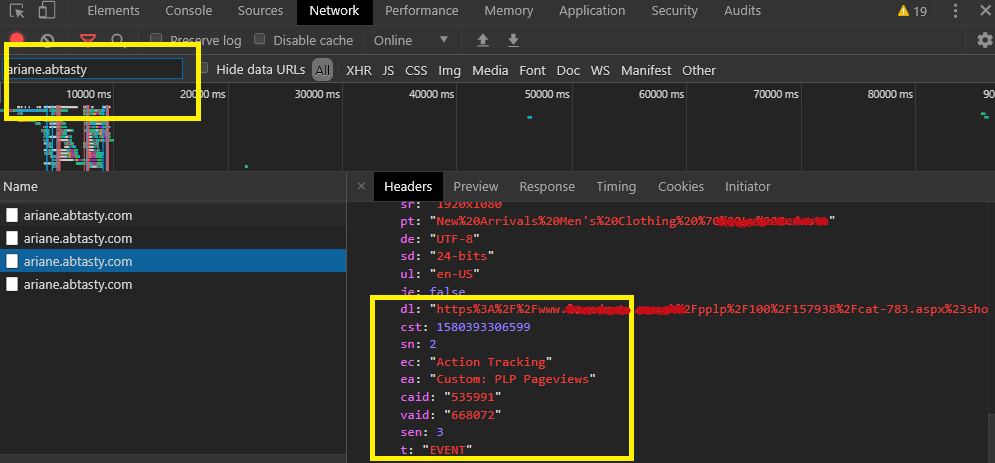

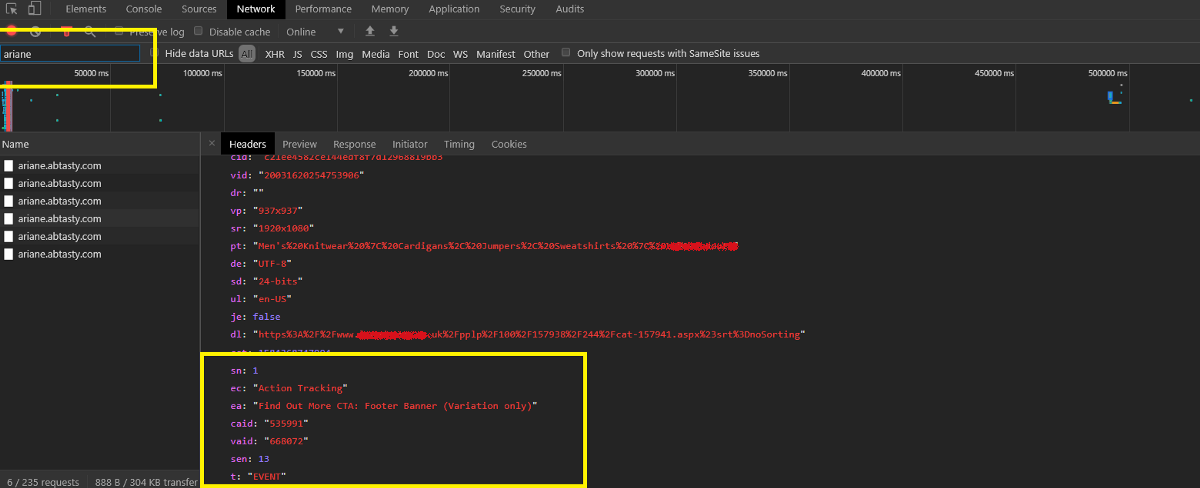

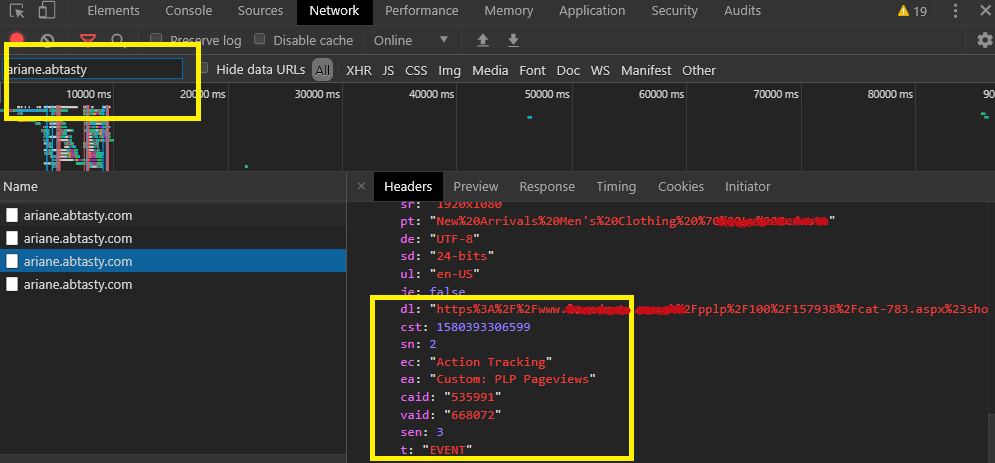

Network console:

The network panel is a log in your browser that records events that occur as you interact with a page. When you trigger an event in AB Tasty it fires a tracking call, which is picked up in the network traffic.

To access the Network tab:

- Right-click on the page. From the dropdown menu, select Inspect in Chrome or Inspect Element in Firefox.

- Select the Network tab.

- Filter with “datacollectAT” or “ariane.abtasty”.

- Click to fire the event whose details you’d like to see.

You can see the list of all events (click, custom, and transaction) that fired. I have highlighted the event names for click and custom goals in the screenshot below.

Custom goals work with the same API call as click goals (so they are also tracked as events). That’s why we add the text ‘Custom’ before all custom goal names, to differentiate between click and custom goals.
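The naming convention above can be used to separate the two goal types when reviewing a list of event names. This is a minimal sketch of that convention only: the function names and the sample event names are hypothetical, and a click goal whose name happens to start with ‘Custom’ would be misclassified.

```typescript
type GoalKind = "click" | "custom";

// Classify an event name using the team convention that custom goals start with "Custom".
function goalKind(eventName: string): GoalKind {
  return eventName.trim().indexOf("Custom") === 0 ? "custom" : "click";
}

// Group a list of event names into click goals and custom goals.
function groupGoals(eventNames: string[]): { click: string[]; custom: string[] } {
  const groups: { click: string[]; custom: string[] } = { click: [], custom: [] };
  for (const eventName of eventNames) {
    groups[goalKind(eventName)].push(eventName);
  }
  return groups;
}

// Hypothetical event names for illustration only.
const sampleGoalGroups = groupGoals([
  "Click CTA button",
  "Custom Added to basket",
  "Click menu link",
]);
```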

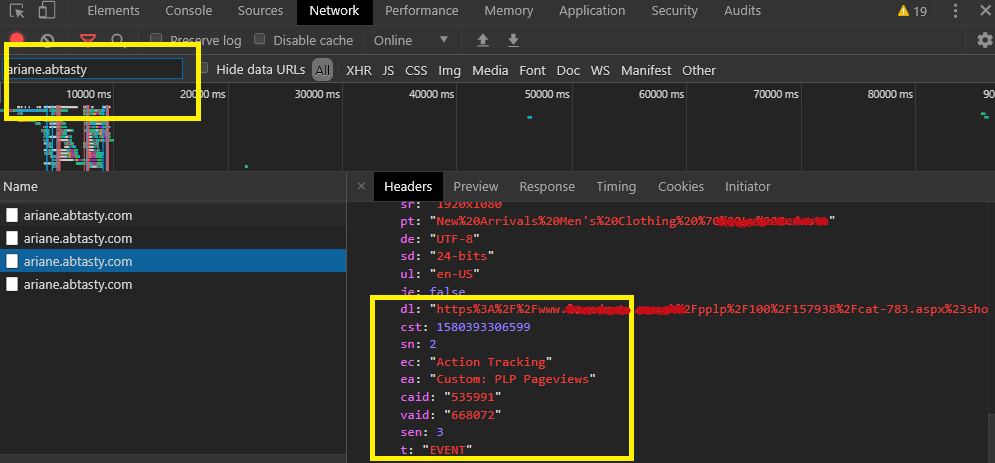

You can see the list of custom events that fired in the screenshot below.

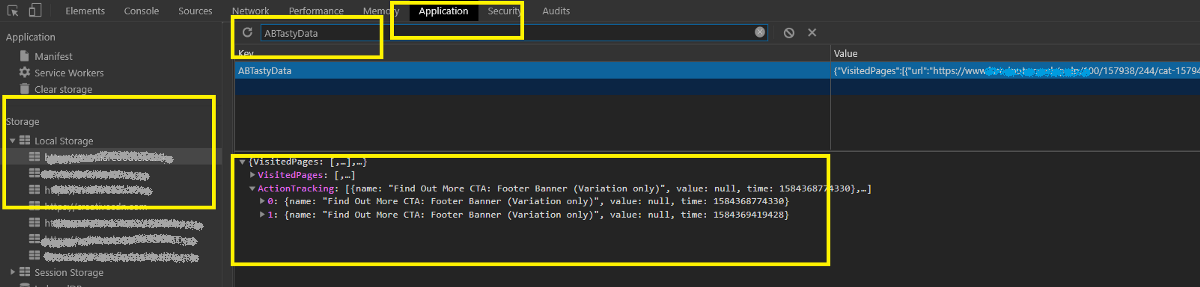

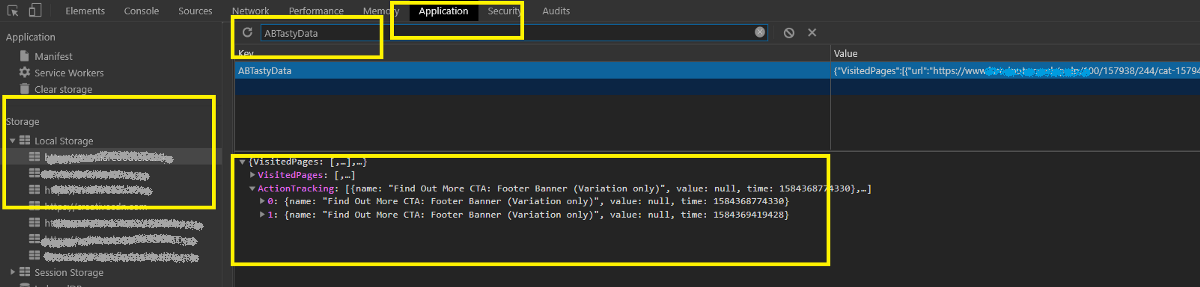

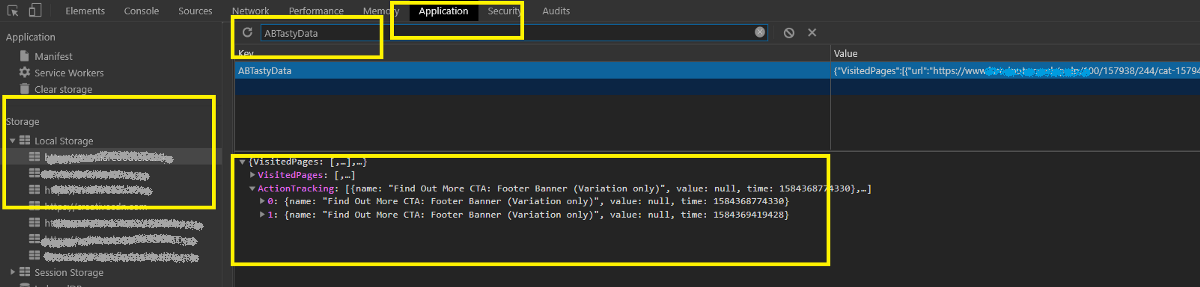

Local storage:

AB Tasty logs the events that occur as you interact with a page in your browser’s local storage. When you trigger an event in AB Tasty, it fires a tracking call and stores that information in local storage.

To access local storage:

- Right-click on the page. From the dropdown menu, select Inspect in Chrome or Inspect Element in Firefox.

- Select the Application/Storage tab.

- Select the Local storage tab.

- Select the domain name of your site.

- Filter with “ABTastyData”.

- Click the ABTastyData entry whose details you’d like to see.

You can see the list of all events (click, custom, and transaction) that fired. I have highlighted the event names for click and custom goals in the screenshot below.

Note: For pageview goals, we have to rely on the AB Tasty campaign result page. The bad news is that it does not update immediately; you need to wait 3-4 hours to see the changes reflected.

We cannot check pageview goals for AB Tasty through the Network console or Local storage because they work differently: AB Tasty tracks the URL of each page and records it under each campaign. (This has other benefits; for example, we can filter the results by any URL without adding it as a pageview goal.) AB Tasty processes all goals, including pageview goals, over a certain period and then updates the results for that campaign.
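The same entry can be read from code instead of the DevTools panel. This is a hedged sketch: it assumes the “ABTastyData” value is a JSON string and makes no assumption about its internal structure, so it only lists the top-level keys. The `StorageLike` interface and the function names are hypothetical; in a browser, `window.localStorage` satisfies the interface.

```typescript
// Minimal shape of a storage object; the browser's localStorage matches it.
interface StorageLike {
  getItem(key: string): string | null;
}

// Read an entry and parse it as JSON. Returns null if the entry is missing
// or is not valid JSON (assumption: the stored value is JSON).
function readJsonEntry(storage: StorageLike, key: string): unknown {
  const raw = storage.getItem(key);
  if (raw === null) return null;
  try {
    return JSON.parse(raw);
  } catch (_err) {
    return null;
  }
}

// List the top-level keys of a parsed entry without assuming its structure.
function topLevelKeys(value: unknown): string[] {
  return typeof value === "object" && value !== null ? Object.keys(value) : [];
}
```

In the browser console, `topLevelKeys(readJsonEntry(window.localStorage, "ABTastyData"))` would show which sections the entry contains, which you can then inspect for the events you fired.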

Over the years, I have heard many people say that their consultants, or in some cases the developer who is building the test, also do the QA of the variations. This is risky and makes it easy to miss bugs before the test goes live. As a result, the research- and data-backed hypothesis test or A/B test could bring back incorrect results. In this article, I have summarized six key reasons why you should be doing independent manual QA of all of your variations.

1. Developers and consultants are too close to the test:

Your developer, and potentially your consultant, are so close to the test they are building that it is very easy for them to miss small but important details if they are in charge of QA.

2. Emulators are not the real thing:

“A veggie hotdog tastes the same as a real hotdog.” Sorry, but they are not the same. Your end users will not use an emulator; they will use a real device and browser. If you do not manually check the variations on actual devices and browsers, you may miss issues specific to real browsers.

3. Interactions:

If you are not manually checking the variations, you might miss issues related to interactions with the page or the variations. This could be opening an accordion, clicking a button, or going through the funnel itself.

4. Checking goal firing:

If you are not manually doing QA across all browsers, you might not be able to verify that your metrics are set up correctly. In a worst-case scenario, you might look at your results after a couple of weeks and notice that your primary metric did not fire properly for some browsers, or at all!

5. Breakpoints and changing device display mode:

If you are using emulators, you might miss issues related to switching from portrait to landscape mode or vice versa. By QAing the variations on actual mobile and tablet devices, you can easily spot-check that the variation displays correctly in both modes, and that it behaves as it should when the user switches between the two.

6. Tests from a Human Perspective:

Manual QA helps to quickly identify when something looks “off.” Automated test scripts don’t pick up these visual issues. When a QA Engineer interacts with a website or software as a user would, they’re able to discover usability issues and user interface glitches. Automated test scripts can’t test for these things.

This is why, here at EchoLogyx, our dedicated QA Engineers always use actual devices and test all variations on the targeted browsers to find issues. They have to be thorough to make sure that no bugs are present in any variation or any development work before we deliver it. They need to check all possible scenarios, and their target is to break the work that our engineers are doing. Essentially, our QA team are the gatekeepers who approve whether the test is ready to go live. This significantly reduces the risk of delivering a bad user experience to the end users of the site.
